In recent years, we’ve witnessed major technology innovations in digital commerce, with a shift from traditional monolithic, server-based architectures towards cloud-native solutions and, more recently, “headless”, API-first platforms. The main driver behind these innovations appears to be the need for greater flexibility and agility as brands deliver innovative customer experiences in a fast-changing digital retail environment. While the adoption of these consumer-facing technologies has accelerated, the “pre-commerce” technology stack has seen little innovation. In that space, monolithic product lifecycle management (PLM) still reigns as the dominant architecture within the retail, footwear and apparel (RFA) industry.

So we must ask ourselves: will monolithic PLM solutions undergo a similar architectural evolution and, more fundamentally, are they still fit for purpose in today’s fast-evolving go-to-market process landscape? To answer these questions and understand where we are today, we must first take a quick look back at the history of monolithic PLM.

The legacy of monolithic PLM

Monolithic PLM solutions emerged in the early 2000s amid the advent and democratization of web-oriented technologies and programming languages. These made it possible to develop sophisticated web server-based applications with domain-specific data models and rich user interfaces that could be accessed from anywhere with a browser. This was seen as revolutionary compared to AS/400-era homegrown applications, which often had rudimentary user interfaces and had to run on terminal clients within a company’s internal network infrastructure.

This gave rise to the first generation of retail-specific PLM solutions, ushering the RFA industry into its first major wave of digital transformation. Around that time, many retailers had outgrown their internally developed solutions or were attempting to systematize their inefficient, document-based processes. Monolithic PLMs offered a compelling value proposition: the ability to centralize all product development data and processes in one all-encompassing, robust enterprise application. The “single source of truth” motto became the key selling point and business driver behind most PLM implementation initiatives.

Over the next two decades, monolithic PLM solutions matured, growing significantly in scope and complexity in response to an ever-growing list of requirements from a diverse set of retailers pursuing the elusive “single source of truth”. What started essentially as a tool to manage CADs and tech packs turned into an all-in-one solution supporting a massive process landscape, from component libraries to quality assurance and customs compliance, with everything in between. This proliferation of requirements spurred a rat race among PLM vendors, each jockeying for market share by bloating their solutions with new features, many of them better suited to flashy demos than to real-life use. In a way, monolithic solutions became jacks of all trades and masters of none.

The increasing size and complexity of monolithic PLMs made them difficult and costly to implement and maintain, resulting in long innovation cycles. In monolithic systems, the components of the application are tightly coupled, creating a high degree of interdependency between the front end, back end, database layer and other third-party components. This means that any time a change is made to the application or any of its internal components, the entire system needs to be retested and redeployed at once. This typically leads to infrequent releases, which makes true agile development difficult. It also means that individual parts of a monolithic application cannot be replaced without major refactoring, preventing software vendors from adopting the latest web technologies, particularly on the front end. In short, monolithic PLMs are slow, expensive and increasingly outdated.

These problems were exacerbated by another flaw: monolithic PLMs tend to be extremely complex on the inside but expose very few APIs to the outside world. Integrations are often limited and typically require custom development and middleware. The lack of native connectivity also prevents retailers from fully leveraging emerging capabilities such as machine learning and artificial intelligence, which rely on a constant stream of data. By nature, monolithic PLMs are silos of product development data, limiting their value as part of a modern connected enterprise.

Despite these challenges, monolithic PLMs served their intended purpose over the years and, in many ways, provided a much-needed sense of structure and control across mission-critical product development processes. They were the perfect solution to the original problem: eliminating process redundancy and technology fragmentation within increasingly complex product development organizations.

An inconvenient “source of truth”

Fast-forward 20 years, and the technology foundation that underpins monolithic PLMs has seen very little evolution. The RFA industry, however, has. In that time, we have witnessed dramatic changes in how brands go to market: from the rise of digital channels and fast fashion to a shift towards private label design and vendor-driven development. Retailers have had to constantly realign their processes to adapt to these new, complex business models. In this climate of change, agility and resilience became the most important qualities of any product development organization.

Alas, the “single source of truth” mantra once heralded as a key PLM benefit slowly became a limitation. The structure and control that came with it arguably hinder organizations’ ability to be agile. Aligning an entire business on a rigid, workflow-driven process is no longer a priority. Instead, retailers need to be able to quickly pivot their operations and ways of working to support channel-specific processes. Monolithic PLMs were never well suited to omnichannel in the first place. After all, they originated in the era of bricks-and-mortar retail and rigid seasonal deliveries.

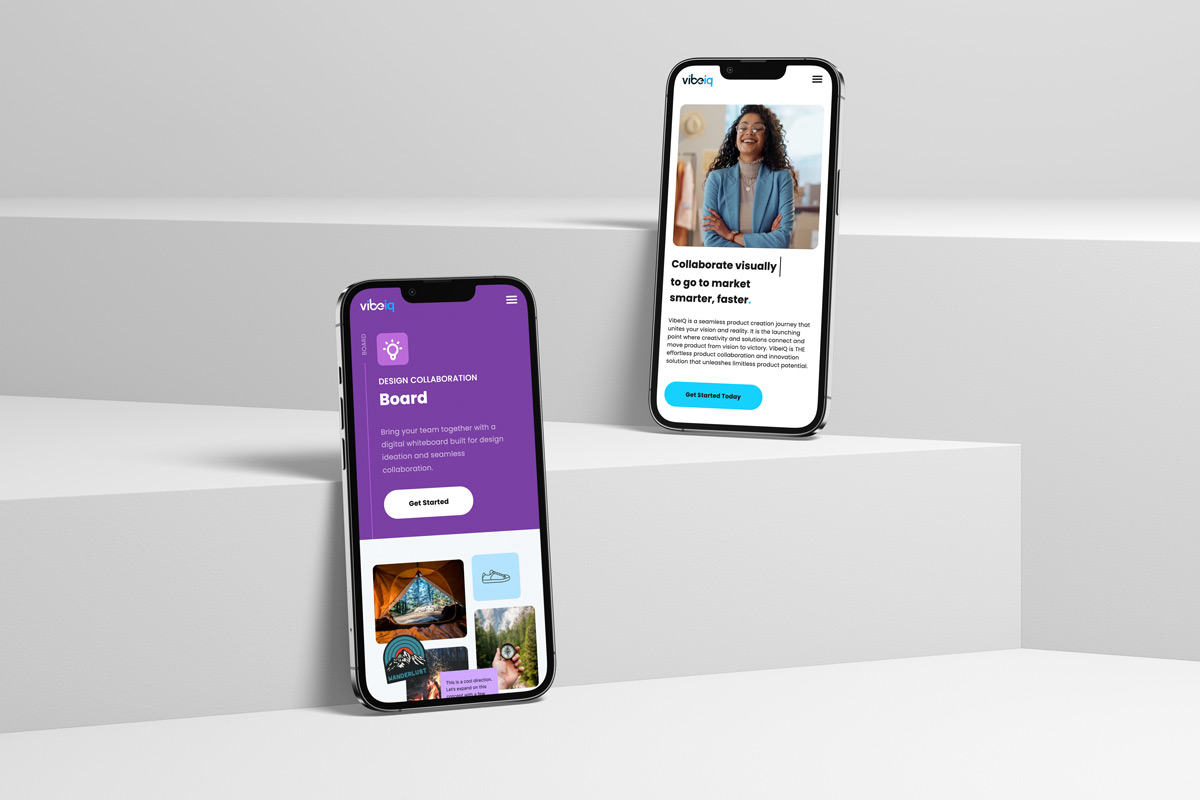

PLM’s rigidity became especially problematic in creative design and merchandising, where processes rely heavily on experimentation and visual collaboration. Ask designers their opinion of PLM and you’ll likely hear the same reply: PLM “stifles” their creativity. To merchandisers, PLM lacks fluidity, shareability and assortment visualization capabilities. As a result, they must resort to spreadsheets, PowerPoint decks and offline communication to do their job.

This highlights the uncomfortable truth about monolithic PLMs: to many users, they are the rigid “system of record” where data is captured, but never where teams collaborate, ideate and make decisions.

Towards a new paradigm: the GTM Platform

The future of monolithic PLM is uncertain; however, one thing is clear: technologies that enable retailers to unlock greater agility and collaboration within their go-to-market processes will prevail. This points logically to cloud-native platform architectures, which have already revolutionized the digital commerce space. Such platforms could not only cover the scope of PLM but also extend to a broader set of stakeholders and processes across the entire go-to-market (GTM) landscape. Let’s call them GTM platforms.

The main characteristic of GTM platforms is that they will be designed as API-first microservices, allowing for flexibility and interoperability between back-end logic and end-user applications. These microservices will let a variety of specialized apps interact with business entities (such as products, assortments and component libraries) independently of one another. This architecture will make it possible to develop a new breed of lightweight front-end applications with world-class user experience and collaboration capabilities.
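To make the idea of independent, entity-specific services more concrete, here is a minimal Python sketch. The names `ProductService`, `AssortmentService` and `assortment_board` are hypothetical, and plain in-process classes stand in for what, in a real GTM platform, would be separately deployed services reached over HTTP or a similar protocol. The point it illustrates is that each service owns its own data, and the lightweight app depends only on the services' public methods.

```python
from dataclasses import dataclass


@dataclass
class Product:
    sku: str
    name: str
    category: str


class ProductService:
    """Hypothetical product microservice: the only owner of product data."""

    def __init__(self) -> None:
        self._products: dict[str, Product] = {}

    def create(self, sku: str, name: str, category: str) -> Product:
        product = Product(sku, name, category)
        self._products[sku] = product
        return product

    def get(self, sku: str) -> Product:
        return self._products[sku]


class AssortmentService:
    """Hypothetical assortment microservice: stores SKU references, not product copies."""

    def __init__(self) -> None:
        self._assortments: dict[str, list[str]] = {}

    def add_to_assortment(self, assortment_id: str, sku: str) -> None:
        self._assortments.setdefault(assortment_id, []).append(sku)

    def skus(self, assortment_id: str) -> list[str]:
        # Return a copy so callers cannot mutate the service's internal state.
        return list(self._assortments.get(assortment_id, []))


def assortment_board(
    products: ProductService, assortments: AssortmentService, assortment_id: str
) -> list[str]:
    """Hypothetical lightweight front-end app that composes both services via their APIs."""
    return [products.get(sku).name for sku in assortments.skus(assortment_id)]


products = ProductService()
assortments = AssortmentService()
products.create("SKU-1", "Linen shirt", "tops")
products.create("SKU-2", "Denim jacket", "outerwear")
assortments.add_to_assortment("SS25-online", "SKU-2")
print(assortment_board(products, assortments, "SS25-online"))  # ['Denim jacket']
```

Because the assortment service only holds SKU references, the product service can evolve its data model without any change to assortment logic, which is the kind of decoupling a monolith's shared database makes hard.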

Organizations will no longer be tied to a single technology stack, reducing vendor lock-in. Instead, they will be able to mix, match and orchestrate various capabilities to support diverse omnichannel and category-specific processes. In theory, a brand could combine apps from multiple software vendors with internally developed ones, as long as they can all communicate through APIs within a GTM platform.
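The mix-and-match idea can be sketched as a shared API contract that every app codes against, regardless of who built it. This is a minimal illustration, not a reference design: `ProductApi`, `InMemoryPlatform`, `VendorLineSheetApp` and `InHouseDropPlanner` are all hypothetical names, and the platform is simulated in-process rather than exposed over a network.

```python
from typing import Protocol


class ProductApi(Protocol):
    """Hypothetical platform contract that every app, vendor or in-house, codes against."""

    def list_skus(self, channel: str) -> list[str]: ...


class InMemoryPlatform:
    """Stand-in for the GTM platform's product API (assumption: in-process, no network)."""

    def __init__(self, catalog: dict[str, list[str]]) -> None:
        self._catalog = catalog

    def list_skus(self, channel: str) -> list[str]:
        return list(self._catalog.get(channel, []))


class VendorLineSheetApp:
    """Hypothetical third-party app that renders a wholesale line sheet."""

    def __init__(self, api: ProductApi) -> None:
        self.api = api

    def render(self, channel: str) -> str:
        return "Line sheet: " + ", ".join(self.api.list_skus(channel))


class InHouseDropPlanner:
    """Hypothetical internally developed app that plans e-commerce drops."""

    def __init__(self, api: ProductApi) -> None:
        self.api = api

    def render(self, channel: str) -> str:
        skus = self.api.list_skus(channel)
        return f"Drop plan: {len(skus)} SKU(s)"


platform = InMemoryPlatform({"wholesale": ["SKU-1", "SKU-2"], "online": ["SKU-2"]})

# Orchestration: each channel is served by whichever app suits it best,
# and both apps talk to the same platform through the same contract.
apps_by_channel = {
    "wholesale": VendorLineSheetApp(platform),
    "online": InHouseDropPlanner(platform),
}

for channel, app in apps_by_channel.items():
    print(app.render(channel))
```

Since neither app knows anything about the other, either could be swapped for a different vendor's product without touching the platform or the remaining app, under the simplifying assumption that everyone agrees on a single contract.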

GTM platforms will enable a much greater level of flexibility in how brands design their future technology stack(s). They will be the technology enabler that spurs a new era of innovation in the “pre-commerce” space. More importantly, they will empower organizations to achieve a truly agile go-to-market process.

Conclusion

One of the silver linings of the pandemic is that it exposed the gaps and limitations in the tools and processes that have been the status quo over the past decades. It also shed light on the unsustainable way our industry has been developing products and bringing them to market. For many retailers, having to work remotely over the past 18 months has highlighted an undeniable fact: digital ways of working are now the norm. Organizations that achieve agility by harnessing new innovations in platform architecture and collaboration tools will undoubtedly gain a competitive advantage. The others will be left with paper cutout dolls, foam core boards and physical showrooms, which already seem like artifacts of a bygone era.